Use Airflow to Project Confidence in Your Data

A key tenet of Raybeam’s mission whenever we start at a new client is to deliver value quickly. This value often takes the form of marketing or product insights that we derive by combing through large amounts of data. As Raybeam is a software engineering firm specializing in data and analytics, we tend to work on the data backend and the analytical frontend simultaneously.

Is the data ready?

The most furious pace of engineering work tends to happen at the beginning of a project as we update or migrate the data and processing architecture. As data quality improves, we’re able to use it to deliver more and more insights. While we do pride ourselves on delivering sustained quality at a quick pace, not everything can happen at once. Inevitably, this leads to one of the most asked questions in analytics:

“Is the data ready?” Or more accurately, “Can I be confident that this data is correct?”

Modern data pipelines often consist of hundreds of external sources sending millions or billions of events per day. A good pipeline can collect, clean, process and serve that data to users quickly and on a regular schedule. When a customer marketing manager checks their dashboard in the morning, it should reflect the current state of customer marketing. There should be no surprises. If the data is meant to be less than 2 hours old, it should be.

But what happens if one source of data hasn’t been updated? It could be any of a million reasons: an API token expired, the source was late, or DevOps had an unscheduled outage. Anyone in a data organization has had this happen, probably more than once. But why should a marketing manager need to know about any of this? They want to know one thing:

“Can I be confident that this data is correct?”

Trust in data, like all trust, takes a long time to build and a moment to break. Once broken, you must start from scratch. Broken trust doesn’t just mean the data is wrong. It means the data is wrong and no one was notified. It’s an important distinction. It’s not possible to control all of your upstream sources so that data is never wrong or out-of-date. But it is possible to notify users before they make a decision based on incorrect or outdated data.

Introducing Raybeam’s status plugin

We wanted to create something that is simple for analysts and data engineers to incorporate into their workflow, but also effective for reporting status to colleagues who might not be as familiar with the underlying data process.

That’s why we’ve created the rb_status_plugin for Airflow.

For most business users, it should quickly answer the question, “Is my data ready?” If the answer is no, it should give the user some way to follow up.

For analysts or engineers, it should fit seamlessly into their Airflow data system and be quick and easy to set up.

Our plugin installs like any other Airflow plugin. There are detailed instructions for a variety of Airflow setups in the README.

Once installed, the rb_status_plugin is incredibly easy to use. It allows you to choose a set of Airflow tasks and assign their results to a report. That report can be scheduled the same way an Airflow DAG is scheduled because, behind the scenes, it is one: the plugin creates a DAG that runs its checks against the tasks you care about.
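The plugin’s internals aren’t covered here, but the pass/fail logic a report implies can be sketched in plain Python. This is a hypothetical model, not the plugin’s API: `evaluate_report`, `ReportStatus`, and the rule that a report passes only when every selected task’s latest state is `"success"` are assumptions for illustration. Tasks are keyed by `(dag_id, task_id)` because a report can draw on tasks from any DAG.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

# A report can pull tasks from any DAG, so a task is keyed by (dag_id, task_id).
TaskKey = Tuple[str, str]


@dataclass
class ReportStatus:
    passed: bool
    failed_tasks: List[TaskKey] = field(default_factory=list)


def evaluate_report(
    task_keys: List[TaskKey],
    latest_states: Dict[TaskKey, Optional[str]],
) -> ReportStatus:
    """Pass only if the latest run of every selected task succeeded.

    A task with no recorded state (missing key or None) counts as not passing.
    """
    failed = [key for key in task_keys if latest_states.get(key) != "success"]
    return ReportStatus(passed=not failed, failed_tasks=failed)


# Hypothetical report covering two tasks from the same DAG.
report_tasks = [
    ("social_marketing", "test_freshness"),
    ("social_marketing", "test_row_count"),
]
states = {
    ("social_marketing", "test_freshness"): "success",
    ("social_marketing", "test_row_count"): "failed",
}
status = evaluate_report(report_tasks, states)
print(status.passed, status.failed_tasks)
# False [('social_marketing', 'test_row_count')]
```

Treating a never-run task as failing is a deliberately conservative choice in this sketch: a report shouldn’t show green for data nobody has checked.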

An example

The tasks can be anything, but we’ve found it works best with data quality checks. We tend to run many pass/fail tests against our data pipeline during and after its execution. In a very simple example, we could have a set of tasks that verifies:

Our dataset is less than 2 hours old

Visitors and sales are positively correlated

The total number of rows added to table X is within 1 standard deviation of the last 2 weeks
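Each of these checks could be its own Airflow task. Airflow marks a task as failed when its callable raises, so a minimal sketch is a set of plain Python functions that raise on failure; each could be passed as `python_callable` to a `PythonOperator`. The function names, `DataQualityError`, and the use of the sample standard deviation over 14 daily counts are assumptions, and fetching the inputs (load timestamp, visitor and sales series, daily row counts) is left out.

```python
import math
import statistics
from datetime import datetime, timedelta, timezone
from typing import Sequence


class DataQualityError(Exception):
    """Raised on a failed check; Airflow marks a task failed when its callable raises."""


def check_freshness(last_loaded_at: datetime, now: datetime,
                    max_age: timedelta = timedelta(hours=2)) -> None:
    """Pass if the dataset is less than max_age old."""
    age = now - last_loaded_at
    if age >= max_age:
        raise DataQualityError(f"dataset is {age} old; limit is {max_age}")


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient (undefined if either series is constant)."""
    mx, my = statistics.mean(xs), statistics.mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def check_positive_correlation(visitors: Sequence[float],
                               sales: Sequence[float]) -> None:
    """Pass if visitors and sales are positively correlated."""
    r = pearson(visitors, sales)
    if r <= 0:
        raise DataQualityError(f"visitors and sales correlation is {r:.2f}")


def check_row_count(new_rows: int, last_two_weeks: Sequence[int]) -> None:
    """Pass if new_rows is within 1 sample standard deviation of the mean."""
    mean = statistics.mean(last_two_weeks)
    stdev = statistics.stdev(last_two_weeks)
    if abs(new_rows - mean) > stdev:
        raise DataQualityError(
            f"{new_rows} rows is more than 1 stdev ({stdev:.1f}) from {mean:.1f}")


now = datetime(2024, 3, 1, 6, 0, tzinfo=timezone.utc)
check_freshness(now - timedelta(minutes=45), now)        # passes
check_positive_correlation([100, 150, 200], [5, 8, 11])   # passes
check_row_count(1_040, [1_000] * 7 + [1_100] * 7)         # passes
```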

We could bundle these tasks into a report and schedule it for every morning at 5AM. Now, say this dataset contains all of the data used in the marketing acquisition team’s dashboards. We could make sure all of the marketing managers on that team were subscribed to this report. Each morning, when they log on, they’ll see an email showing them the status of the data that day. It would, presumably, be green the majority of the time. For the days where there was a problem, however, they would see that the data is not ready and have a quick way to follow up.

Our primary goal was to make reports simple both to create and to use. Our philosophy is to build small, simple tools that work well. Obviously, many other parts go into providing confidence, including scheduling, data quality testing, logging and alerting, to name a few. We build tools to handle those parts of the process as well, and we’re working on a plan to make it easy for all of them to work together. For now, though, we think we’ve created a simple tool that will make our lives, and our clients’ lives, just a little easier. Maybe it will help you too.

How it works

We’re going to walk through a couple of use cases to show how we’re using this plugin.

First, let’s assume we’re working with a social media marketing manager. They handle all social channels and their overriding goal is to make sure they’re getting the best ROI for their budget.

Let’s review a DAG that might be running for this type of work.

I’ve just made this up, but you can see that I’m loading data from some social channels, joining it, running a few tests and then building some downstream datasets.

From the green outlines, you can tell they’re all passing right now.

Creating a report

Now, let’s pretend I’m an analyst on the social media marketing team and I want to create a report so the people I support can feel confident about the data without asking me every day.

The rb_status_plugin adds a menu item in Airflow named “Status”, with two options in its dropdown: the “Status Page”, which shows all current statuses, and the report list.

We’re going to click into the “Status Page” in this example.

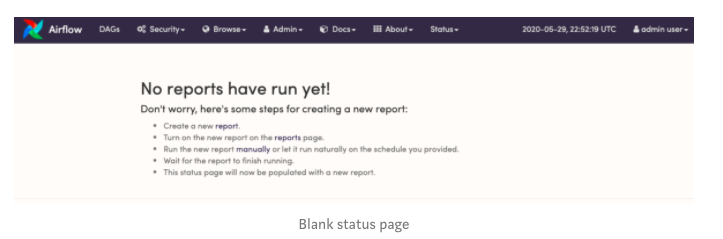

Empty Status Page

We haven’t created any reports yet, so we’ll be greeted with some helpful options and instructions.

We want to create a new report, so let’s click on “Create a new report”

New report

We’re taken to a straightforward page for adding a report.

You can name and describe the report so that anyone receiving it knows what the status relates to.

The owner and owner email will automatically be added to the list of subscribers. Emails for the main audience of this status report should be added in the “Subscribers” fields. In our example, that would be anyone in the social media marketing team.

New report: Scheduling

For scheduling, the options follow Airflow’s scheduling options. You can choose “None” if you’d like to trigger the report manually, which is useful when you’re first testing it. Otherwise, you can choose daily, weekly or, for more customized cases, cron notation.
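For reference, Airflow’s named presets are shorthand for cron expressions, and the “every morning at 5AM” report from earlier would be `0 5 * * *`. The sketch below lists a few presets and computes when a daily HH:MM schedule next fires. That the UI’s daily and weekly options map to these presets is an assumption, and the helper (`next_daily_run`, hypothetical) ignores Airflow’s convention of starting a run at the end of its interval as well as time zones (Airflow defaults to UTC).

```python
from datetime import datetime, timedelta

# A few of Airflow's cron presets and their cron equivalents.
PRESETS = {
    "@hourly": "0 * * * *",
    "@daily": "0 0 * * *",
    "@weekly": "0 0 * * 0",
    "@monthly": "0 0 1 * *",
    "@yearly": "0 0 1 1 *",
}

# "Every morning at 5AM" in cron notation.
FIVE_AM_DAILY = "0 5 * * *"


def next_daily_run(after: datetime, hour: int = 5, minute: int = 0) -> datetime:
    """Next wall-clock time strictly after `after` that a daily HH:MM cron fires."""
    candidate = after.replace(hour=hour, minute=minute, second=0, microsecond=0)
    if candidate <= after:
        candidate += timedelta(days=1)
    return candidate


print(next_daily_run(datetime(2024, 3, 1, 4, 30)))  # 2024-03-01 05:00:00
print(next_daily_run(datetime(2024, 3, 1, 5, 0)))   # 2024-03-02 05:00:00
```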

New report: Tests

Now we’re going to choose the tests whose results combine to determine the status of the report. Tests, in our case, can be any Airflow task. Often they will be data quality tests, but the rb_status_plugin doesn’t require that.

You’ll notice we try to make this easier by providing a type-ahead search and dropdown.

Note that if a task has not yet been run, it won’t be in Airflow’s database, so it won’t show up in the dropdown. You’ll need to run that specific task manually at least once, or run the entire DAG it belongs to at least once.
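Why a never-run task is missing can be illustrated with Airflow’s `task_instance` metadata table: a task gets rows there only once it runs. The sketch below uses an in-memory SQLite table as a stand-in; the simplified four-column schema and both queries are assumptions for illustration, not the plugin’s actual queries. The same table records each run’s state, which is the kind of information a report checks when it reads Airflow’s own database.

```python
import sqlite3
from typing import Dict, List, Tuple

# Simplified stand-in for Airflow's task_instance metadata table. The real
# table has many more columns, and newer Airflow versions key runs by run_id.
SCHEMA = """
CREATE TABLE task_instance (
    dag_id TEXT, task_id TEXT, execution_date TEXT, state TEXT
)
"""


def selectable_tasks(conn: sqlite3.Connection) -> List[Tuple[str, str]]:
    """Tasks with at least one recorded run; unrun tasks have no rows here."""
    rows = conn.execute(
        "SELECT DISTINCT dag_id, task_id FROM task_instance "
        "ORDER BY dag_id, task_id"
    )
    return [(dag_id, task_id) for dag_id, task_id in rows]


def latest_states(conn: sqlite3.Connection) -> Dict[Tuple[str, str], str]:
    """State of the most recent run of each task."""
    rows = conn.execute(
        """
        SELECT ti.dag_id, ti.task_id, ti.state
        FROM task_instance AS ti
        JOIN (
            SELECT dag_id, task_id, MAX(execution_date) AS latest
            FROM task_instance
            GROUP BY dag_id, task_id
        ) AS m
          ON ti.dag_id = m.dag_id
         AND ti.task_id = m.task_id
         AND ti.execution_date = m.latest
        """
    )
    return {(dag_id, task_id): state for dag_id, task_id, state in rows}


conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)
# A task such as load_twitter that has never run has no rows, so it is absent.
conn.executemany(
    "INSERT INTO task_instance VALUES (?, ?, ?, ?)",
    [
        ("social_marketing", "load_facebook", "2024-03-01", "success"),
        ("social_marketing", "load_facebook", "2024-03-02", "failed"),
        ("social_marketing", "test_freshness", "2024-03-02", "success"),
    ],
)
print(selectable_tasks(conn))
# [('social_marketing', 'load_facebook'), ('social_marketing', 'test_freshness')]
print(latest_states(conn)[("social_marketing", "load_facebook")])  # failed
```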

Running the report

If you go back to the status page, you’ll see a warning.

Reports are actually DAGs behind the scenes so they need to run.

Since we set the schedule to “None”, it’s not going to run on its own. We’ll need to manually trigger it.

You could trigger it like any other DAG, by clicking the “play” button in the DAG list. We also provide a “run” and “delete” option in the report list UI.

Note that all DAGs are created in the “Off” state for Airflow. We at Raybeam like to follow conventions of the underlying tool as much as possible, so reports will also be “Off” after creation. You’ll need to click on the “On/Off” toggle either in the report list or DAG list to turn the report on and run it.
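If you prefer to script these two steps, and you run Airflow 2.x with its stable REST API enabled (an assumption about your setup; the plugin isn’t tied to it), turning a DAG on and triggering it map to `PATCH /api/v1/dags/{dag_id}` with `is_paused` set to false and `POST /api/v1/dags/{dag_id}/dagRuns`. The sketch below only builds the requests with the standard library. The report DAG id `rb_status_social_marketing` is hypothetical, and authentication is omitted.

```python
import json
import urllib.request
from urllib.parse import quote

# Assumes Airflow 2.x's stable REST API. Authentication headers are omitted;
# add whatever your deployment requires before sending.


def _json_request(url: str, method: str, body: dict) -> urllib.request.Request:
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method=method,
    )


def unpause_request(base_url: str, dag_id: str) -> urllib.request.Request:
    """Equivalent of switching the DAG's On/Off toggle to On."""
    return _json_request(
        f"{base_url}/api/v1/dags/{quote(dag_id, safe='')}",
        "PATCH",
        {"is_paused": False},
    )


def trigger_request(base_url: str, dag_id: str) -> urllib.request.Request:
    """Equivalent of the "play" button: create a new manual DAG run."""
    return _json_request(
        f"{base_url}/api/v1/dags/{quote(dag_id, safe='')}/dagRuns",
        "POST",
        {"conf": {}},
    )


# "rb_status_social_marketing" is a hypothetical report DAG id.
base = "http://localhost:8080"
for req in (unpause_request(base, "rb_status_social_marketing"),
            trigger_request(base, "rb_status_social_marketing")):
    print(req.get_method(), req.full_url)
    # urllib.request.urlopen(req) would send it once auth is configured.
```

Unpausing comes first because, as with the UI toggle, a paused DAG’s runs won’t be executed.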

Once the report is “On” and you’ve triggered it, you’ll need to wait for Airflow to pick it up and run it. It should finish quickly, since it mainly checks Airflow’s own underlying database.

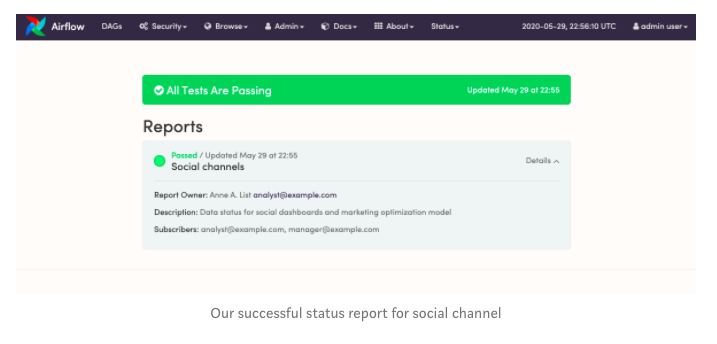

Once the report DAG finishes, you’ll be able to see it on the Status page.

It looks like all tests have passed and we have a successful status. If you’ve set up email, all of your subscribers will also receive the status in their inbox.

Clicking on “details” will take the user back to the Airflow status page.

Schedule the report

Now is a good time to go back and schedule this report to go out on a regular basis. That way you can keep your users up to date automatically.

Second report: Failing tests

Let’s create a second report. For this one, I’m going to pretend that I’m on the data engineering team. I don’t know exactly how the business is using every piece of data, but I do know that all of my external partners need to be loaded correctly, every day, on time.

For this example, I’m going to have our Facebook load fail. Maybe someone didn’t check and the API key expired.

In this case, we’ve set up the DAG to continue even if an individual job fails. That’s because we’ve added other tests throughout the DAG that tell us whether the final dataset is valid.
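How the DAG is configured to keep going isn’t shown, but one common way in Airflow is a trigger rule: by default (`all_success`) a task runs only if every upstream task succeeded, while `all_done` lets it run once every upstream task has finished, whatever the outcome. Setting `trigger_rule="all_done"` on the downstream join task is an assumption here. The function below is a simplified plain-Python model of those two rules for illustration, not Airflow’s implementation.

```python
from typing import Iterable

# Upstream states that count as "finished" in this simplified model.
FINISHED_STATES = {"success", "failed", "skipped", "upstream_failed"}


def ready_to_run(trigger_rule: str, upstream_states: Iterable[str]) -> bool:
    """Simplified model of two Airflow trigger rules.

    all_success (Airflow's default): every upstream task succeeded.
    all_done: every upstream task finished, whatever its outcome.
    """
    states = list(upstream_states)
    if trigger_rule == "all_success":
        return all(state == "success" for state in states)
    if trigger_rule == "all_done":
        return all(state in FINISHED_STATES for state in states)
    raise ValueError(f"trigger rule not modeled in this sketch: {trigger_rule}")


# The Facebook load failed while the other loads succeeded.
loads = ["failed", "success", "success"]
print(ready_to_run("all_success", loads))  # False: the join would not run
print(ready_to_run("all_done", loads))     # True: the join runs anyway
```

The trade-off is that `all_done` lets bad or missing data flow downstream, which is why the later tests in the DAG, and a report over the load tasks, matter.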

Maybe the social marketing team can go one day without new Facebook data. Yeah, yeah, I know, pretty unlikely, but it’s an example.

Create the loading report

For this report, I’m going to include all of the load tasks. In an actual Airflow instance, maybe I have load tasks scattered throughout a bunch of DAGs. That’s fine! The rb_status_plugin views everything at a task level. You can add tasks to a report, no matter which DAG they’re in.

Now you can see our report in the report list. We’ll need to turn it on and run it.

Failed status report

Well, as you can see, our status report failed because the Facebook loading job failed.

You’ll also notice that the social marketing report is still listed. A summary note at the top reads “Some tests are failing”. If it’s red, you know to check the specific reports. If it’s green, you know the entire system is good to go.

The failed task is listed in the details and it links back to the job log for that task run. You can easily use the status report to click back into the task and find the underlying problem.
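The roll-up described here, one summary note for the whole page plus each report’s failed tasks, can be sketched as follows. The data shapes, report names, `status_page_lines`, and the “All tests are passing” wording are hypothetical; only “Some tests are failing” comes from the page described above. Links to task logs are left out because their URL format depends on your Airflow version.

```python
from typing import Dict, List, Tuple

# Each report maps to (dag_id, task_id, latest_state) for its tasks.
Reports = Dict[str, List[Tuple[str, str, str]]]


def status_page_lines(reports: Reports) -> List[str]:
    """Top-level summary first, then each report with its failed tasks."""
    failed_by_report = {
        name: [(dag_id, task_id) for dag_id, task_id, state in tasks
               if state != "success"]
        for name, tasks in reports.items()
    }
    any_failed = any(failed_by_report.values())
    lines = ["Some tests are failing" if any_failed else "All tests are passing"]
    for name, failed in failed_by_report.items():
        lines.append(f"{name}: {'FAILED' if failed else 'OK'}")
        lines.extend(f"  - {dag_id}.{task_id}" for dag_id, task_id in failed)
    return lines


reports = {
    "Social marketing": [("social_marketing", "test_freshness", "success")],
    "Partner loads": [
        ("social_marketing", "load_facebook", "failed"),
        ("social_marketing", "load_twitter", "success"),
    ],
}
print("\n".join(status_page_lines(reports)))
```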

And once again, any subscribers will receive an email with the current status of the report.

Conclusion

By now, you’ve been thoroughly introduced to the new rb_status_plugin. We discussed the need for it and have gone through a couple of use cases.

Try it out and give us feedback.

Astronomer and Enterprise Airflow

You may have noticed that our screenshots look a little different from your Airflow setup. That’s because we use Astronomer when we have the choice. Astronomer is the easiest way to run, develop and deploy Airflow that we’ve found.

We use their Docker image for local development, and scaling to an enterprise-capable deployment can be done in minutes. Give them a try if you haven’t yet.